Application

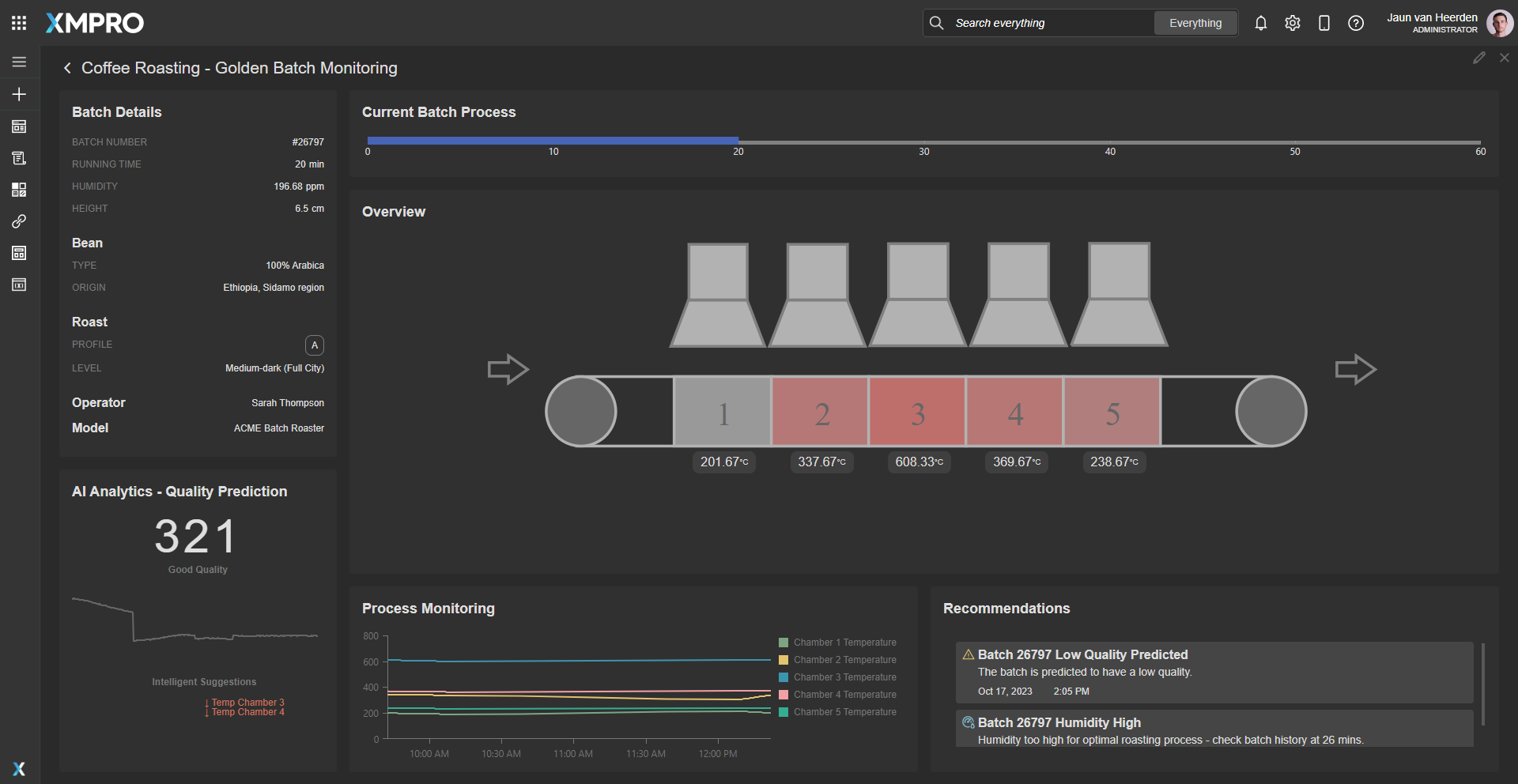

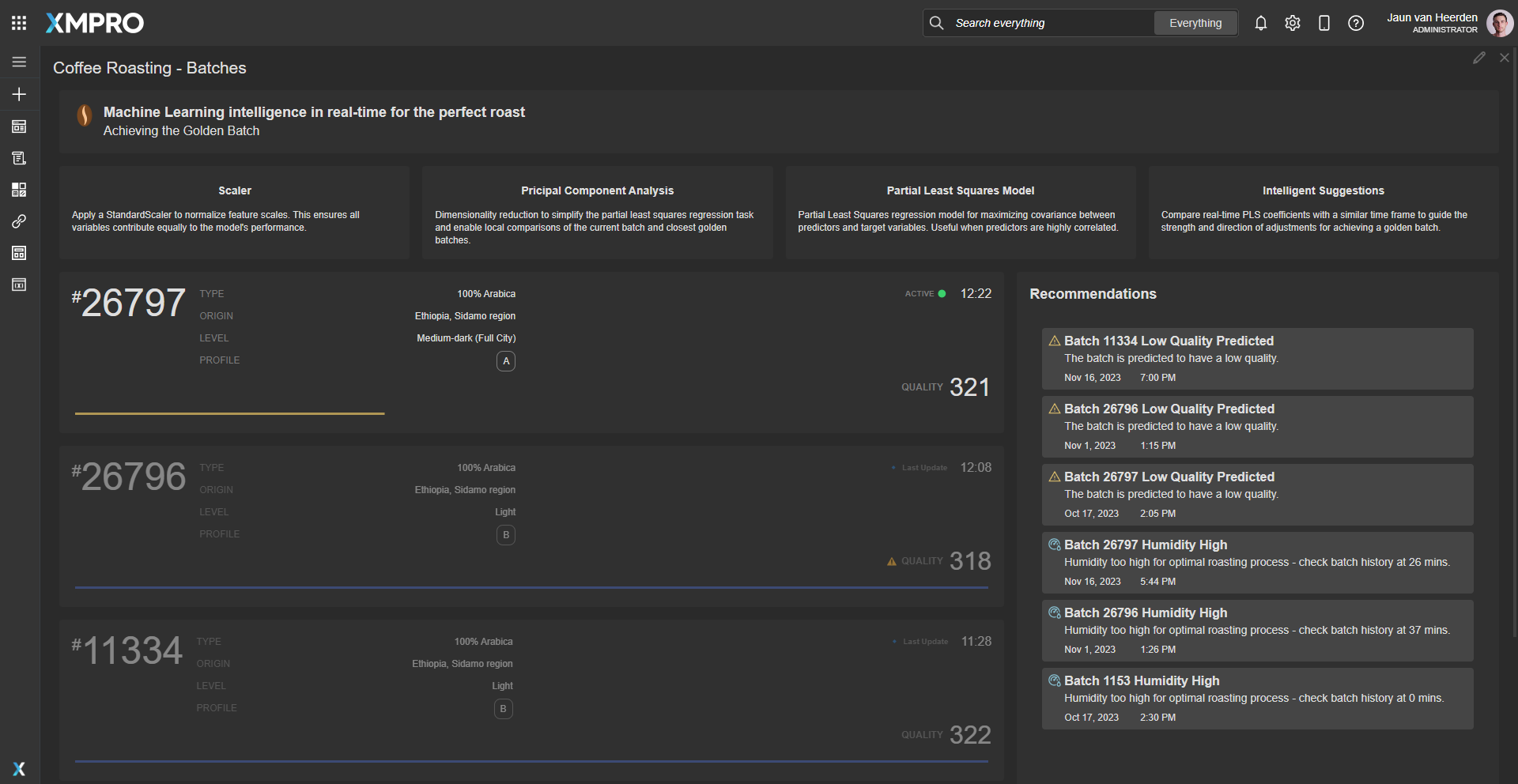

An overview of current and previous batch processes, with drilldown to individual batches. Real-time monitoring of a batch provides live data and the status of the process, including intelligent suggestions to steer the quality towards a golden batch signature. The application is configured using:

Landing Page [1.0 Batches]

| Block | Description |
|---|---|
| Linear Gauge | To visualize the batch progress |
| Recommendations | To view current open recommendations for all batches |
| Indicator | To visually indicate the active batch |

Drilldown [2.0 Batch]

| Block | Description |
|---|---|
| Linear Gauge | To visualize the batch progress |
| D3 | A visualization to show the live temperature values |
| Sparkline | A sparkline to indicate the rate of change for quality |
| Chart | To display the live operational data |
| Recommendations | To view current open recommendations for the batch |

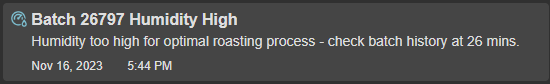

Recommendations

A single recommendation is configured, using two rules:

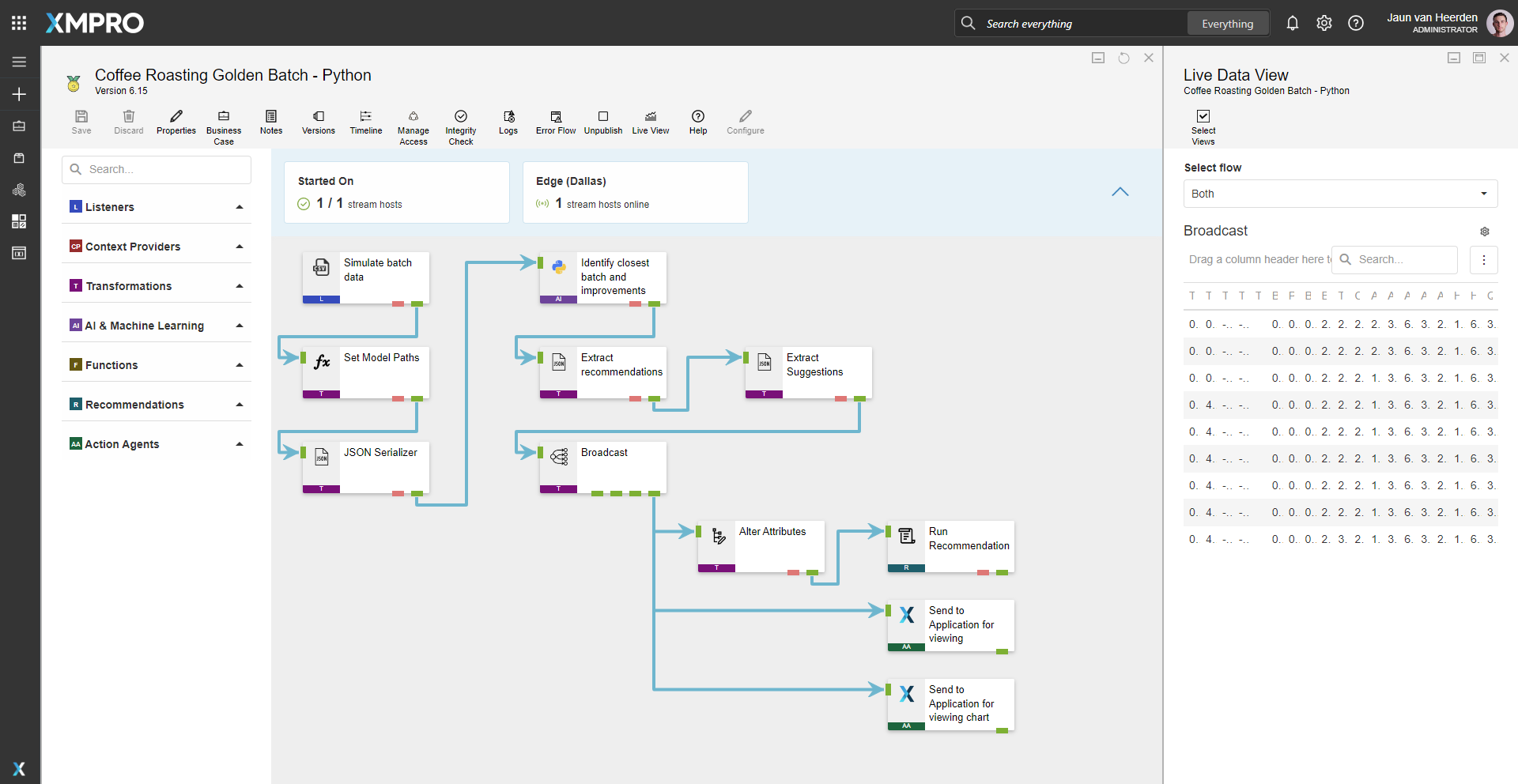

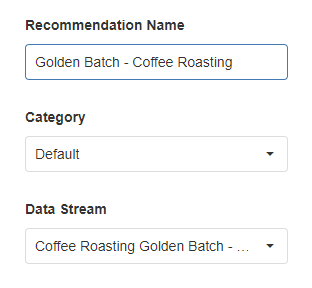

Data Stream

An example of how to contextualize simulated data, predict batch quality, receive intelligent suggestions, run recommendations and output the batch roaster data to the Application Designer. The data stream is configured using:

| Agent | Description |
|---|---|
| CSV Listener | Simulate the batch data |
| Calculated Field | Setting the model paths dynamically |
| JSON Serializer | Package the data into a JSON object |
| Python | Run the Golden Batch model |
| JSON Deserializer | Unpack the results |
| Alter Attributes | Unpack the intelligent suggestions |
| Broadcast | Broadcast data to other agents |
| XMPro App | View data in the App Designer |
| Run Recommendation | Pass the data to the Recommendation engine to evaluate |
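To make the hand-off between the Calculated Field, JSON Serializer and JSON Deserializer agents more concrete, the following is a minimal Python sketch of the data shape only. It is not the XMPro agent API: the function names, the `model_paths` key, the `/opt/models` folder and the `batch_id` and `temperature` fields are all hypothetical. Only the three model file names come from this document.

```python
import json
from pathlib import PurePosixPath

# Model file names as listed in the notebook outputs of this document.
MODEL_FILES = {
    "pca": "gb_pca.sav",
    "pls": "gb_pls.sav",
    "scaler": "gb_scaler.save",
}


def build_model_paths(model_dir: str) -> dict:
    """Build the model file paths from a configurable folder (hypothetical helper)."""
    base = PurePosixPath(model_dir)
    return {name: str(base / filename) for name, filename in MODEL_FILES.items()}


def package_row(row: dict, model_dir: str) -> str:
    """Attach the model paths to one row of batch data and serialize it to JSON."""
    payload = dict(row)  # copy so the caller's row is not modified
    payload["model_paths"] = build_model_paths(model_dir)
    return json.dumps(payload)


def unpack_payload(serialized: str) -> dict:
    """Deserialize a JSON string back into a dictionary."""
    return json.loads(serialized)


if __name__ == "__main__":
    # Assumed example row; real column names depend on the simulated CSV.
    message = package_row({"batch_id": "B-1", "temperature": 201.5}, "/opt/models")
    print(unpack_payload(message)["model_paths"]["pca"])
```

Keeping the folder as a single parameter mirrors the idea of setting the model paths dynamically: moving the model files then requires changing one value rather than three.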

Steps to Import

1. Create/confirm variables

Ensure the following variables are available to be used in the data stream:

- App Designer URL

- App Designer Integration Key (Encrypted)

2. Run the XMPro Notebooks

- Run the first Notebook to prepare the data. Expected output: `roaster.csv` & `clean.parquet` in the `data` folder

- Run the second Notebook to develop the machine learning model. Expected output: `gb_pca.sav`, `gb_pls.sav` & `gb_scaler.save` in the `models` folder

- Save the model files in a location that is accessible by the Stream Host

Ensure the Stream Host uses the same versions of the Python libraries as the notebooks that generated the models. For this example, scikit-learn 1.6.1 was used.

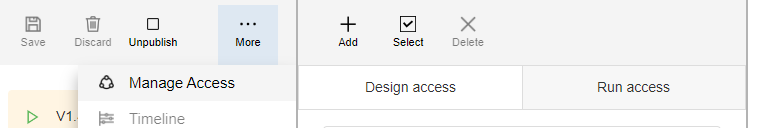

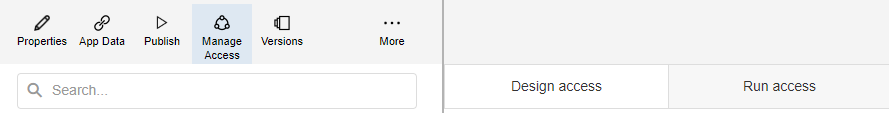
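The version requirement above can be checked on the Stream Host before the data stream is published. The following is a minimal sketch using only the Python standard library. The helper name `find_version_mismatches` and the injectable `get_version` argument are assumptions made for illustration; only the scikit-learn 1.6.1 pin comes from this document.

```python
from importlib import metadata

# Version used in this example to generate the models.
EXPECTED_VERSIONS = {"scikit-learn": "1.6.1"}


def find_version_mismatches(expected, get_version=metadata.version):
    """Return {package: (expected, installed)} for every package that differs.

    `installed` is None when the package is not installed at all.
    `get_version` can be replaced for testing (hypothetical convenience).
    """
    mismatches = {}
    for package, wanted in expected.items():
        try:
            installed = get_version(package)
        except metadata.PackageNotFoundError:
            installed = None
        if installed != wanted:
            mismatches[package] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    problems = find_version_mismatches(EXPECTED_VERSIONS)
    for package, (wanted, installed) in problems.items():
        print(f"{package}: expected {wanted}, found {installed}")
    if not problems:
        print("All library versions match.")
```

Running this with the same Python runtime that the Stream Host uses gives an early warning before a model file fails to load or behaves differently from the notebook.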

3. Import the Data Stream

- Select the highest agent version number on import, if prompted

- Assign Access to others as required

- XMPro agents - ensure the URL & Integration Key are selected

- Recommendation agent - ensure the URL & Integration Key are selected

- Calculated Field - ensure the correct model file paths are configured

- Python agent - ensure the correct Python version is selected, a stream host has access to a Python runtime, the paths are set and the script is applied.

OR

- MLFLow agent - ensure the correct model version is selected and the stream host has access to the MLFlow server.

- Click Apply and save the data stream

- Publish the data stream and open the live view

- Ensure there is data in the live view by monitoring the agents

4. Import the Recommendations

- Map the data stream to import

- Assign Access to others as required

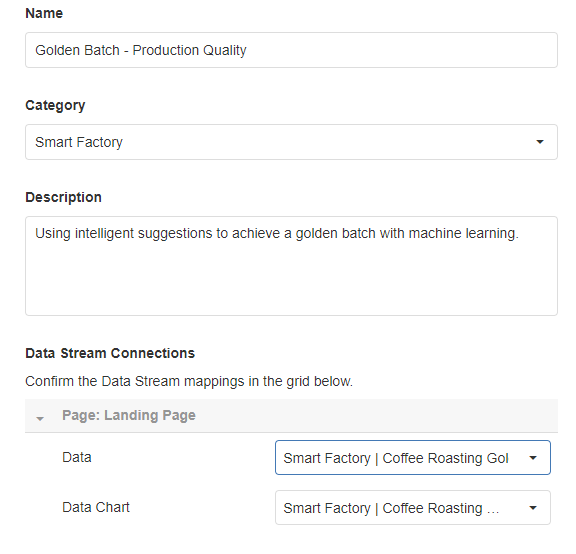

5. Import the Application

- Map the data source on import:

- Landing Page:

| Data Source Name | Data Stream | Agent Option |
|---|---|---|
| Data | Coffee Roasting Golden Batch - Python/MLFlow | Send to Application for viewing |
| Data Chart | Coffee Roasting Golden Batch - Python/MLFlow | Send to Application for viewing chart |

- Assign Access to others as required

- Ensure the App Data connection properties are configured and valid

- Edit the application to link the recommendations (Select Golden Batch - Coffee Roasting in Block Properties under Behavior)

| Page | Location |
|---|---|
| 1.0 Batches | Bottom Right |
| 2.0 Batch | Bottom Right |

- Save the application

- Publish the application

- Ensure there is data in the application and that the Unity model is receiving its data by hovering over and observing the values